One of my favourite activities on a relaxed weekend morning is watching a couple of Veritasium and B1M videos. Last week Veritasium published a superb video on a [not so] simple Linux exploit that could have had HUGE ramifications. If you haven’t seen it, go watch it, I’ll wait. It’s actually one of the most fascinating, yet little-known, stories in recent tech history, and it sits right at the intersection of many of the things that interest me: open source, trust, the humans behind the software, and just how fragile much of it really is.

A Lone Maintainer

If you CBA watching the video (seriously though, you really should, just put it on 1.5x!), here’s the short version. A lone developer called Lasse Collin maintained a compression library called “XZ Utils”, quietly and unpaid, for roughly twenty years. You’ve almost certainly never heard of it, which is basically the point. It sits underneath an enormous amount of critical infrastructure that millions of servers rely on every single day, including (most importantly) SSH. Nobody thinks about it, nobody talks about it, and for most of its life exactly one person was keeping the lights on…

Lasse, who was already burned out and struggling with his mental health, was being hounded by “accounts” to make more progress on the project, with some messages encouraging him to accept help and hand over responsibility to other devs. Then someone calling themselves “Jia Tan”, which we now know was almost certainly a nation-state operation, showed up and spent two and a half years patiently social engineering their way into becoming a trusted maintainer of the project. They were helpful, responsive, wrote good code, etc. All seemed peachy! Enter Rich Jones, who works at Red Hat, packaging Fedora. He began to trust Jia because… well, because Jia behaved exactly like the kind of person open source desperately needs. That’s what makes social engineering so effective; the good behaviour is the attack.

A Backdoor to the Internet

The backdoor they slipped in was technically brilliant and horrifying in equal measure: a hidden compromise buried in the compression library that would have given someone access to a significant chunk of the world’s servers if it had made it into stable releases across major Linux distros. The whole thing eventually unravelled because a single Microsoft developer called Andres Freund noticed that his SSH logins were taking half a second longer than they should and decided to dig into why. Five hundred milliseconds stood between us and a catastrophic supply chain compromise, and one curious engineer is the reason we caught it.

Open Source Matters

I’m pro open source, always have been. I’ve been using Linux since the 90s, which probably gives you an idea of what colour my beard is. The concept of “Software should be free and we’ll prove it works” is unarguably one of the greatest ever human endeavours. At a time when geopolitical tensions seem to ratchet up weekly, and we’re supposedly retreating into blocs and borders, the fact that open source still works is genuinely remarkable and something to make you proud of being an ape descendant! It’s proof that like-minded humans can continue to collaborate on a global scale.

But here’s where we have a challenge… Linus’s Law says that given enough eyeballs, all bugs are shallow. That’s true for projects that actually have enough eyeballs, but popularity does not equal scrutiny. XZ Utils had millions (perhaps billions) of installs, but for most of its life basically one person was reading the code, and then there were two, and the second one was the attacker. (Yes, I know, hyperbole, but you get the point!) Downloads are not eyeballs, installs are not audits, and I think we’ve been confusing usage with oversight for a long time. The XZ story is a great example of where it went both brilliantly right and very wrong.

The real vulnerability here wasn’t technical; it was a system that let a single unpaid maintainer carry critical infrastructure on his back for two decades without meaningful support. We collectively built our digital world on top of someone’s volunteer labour and then acted surprised when that became an attack surface!

Will AI Make This Better or Worse?

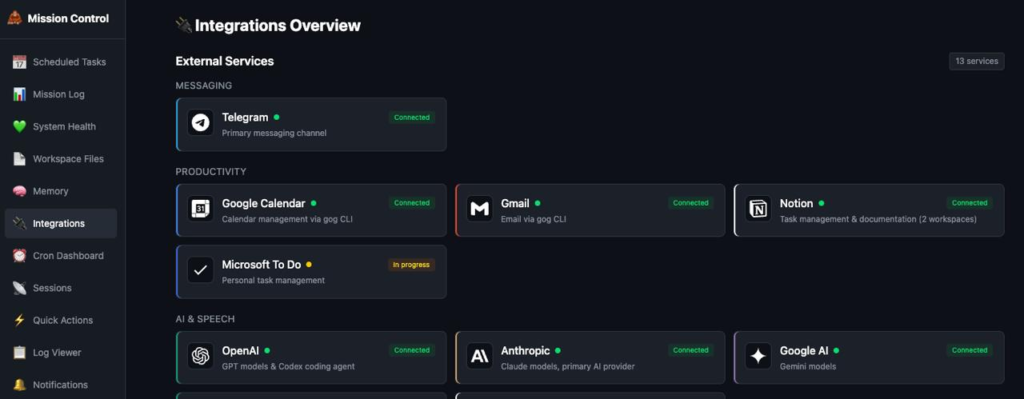

Which brings me to the point of this article.

Can AI help with this?

[… and yes, it’s AI again! You now have permission to roll your eyes about having read YAAIA, aka yet another AI article]

In theory, AI-powered code review could potentially spot the kind of obfuscated changes that slipped through here. Automated analysis tooling that never gets tired, never gets burned out, never feels social pressure to approve a commit because the submitter has been so helpful lately. That sounds very promising, but there’s another side to it. As more code gets written by AI and reviewed by AI, do we end up with even fewer human eyeballs on critical paths? Do we create a new kind of “enough eyeballs” fallacy where the eyeballs are all artificial and share the same blind spots?

I genuinely don’t know the answer, and I suspect the truth is that AI will simultaneously make some attacks harder and others easier [really helpful insight, I know!].

Interesting Times

What I do know is that the XZ story deserves to be told widely, especially within our industry. Absolutely not as a cautionary tale about open source, because closed source has its own risks and horror stories that just happen behind closed doors.

To me, it’s a reminder that the humans behind the code matter as much as the code itself. Fund the hard-working maintainers, buy them a ko-fi, support the people doing unglamorous work, and maybe, occasionally, investigate when something takes half a second longer than it should.

As my favourite author of all time, Sir Terry Pratchett, reminded us, “may you live in interesting times”. As the world tries to keep up with supply chain security, I can confirm interesting times are in the current sprint…

PS – Amusingly, as I was writing this, another article on a similar topic, about FOSS repos, hit the headlines. Worth a few minutes of your time too, I reckon.
